Doug Cutting and Mike Cafarella, two well-known computer scientists, created Hadoop in 2006. They drew their inspiration from Google's MapReduce, a programming model that breaks an application down into many small parts, each of which can run on any node in the cluster. After years of development, Hadoop reached its 1.0 release at the end of 2011 as a project of the Apache Software Foundation.

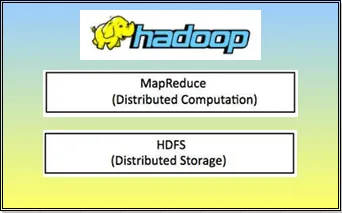

Hadoop is an open-source software framework for storing data and processing it on clusters. It provides massive storage for any kind of data, substantial processing power, and the ability to run a very large number of tasks or jobs in parallel. It is widely used, and there are two fundamental things to understand about it: how it stores data and how it processes data.

Hadoop stores data across a cluster through HDFS (Hadoop Distributed File System), which is part of Hadoop. Imagine a file larger than your PC's capacity. HDFS lets you store files bigger than any single server or node could hold by spreading them across many nodes, and it lets you store many such large files. Clusters can be very large: an internet giant like Yahoo, for example, has run Hadoop on thousands of nodes.
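To make the storage idea concrete, here is a minimal sketch of how a large file could be cut into fixed-size blocks and spread over several nodes. It is a toy simulation, not the HDFS API: the function names, the node names, the 128 MB block size and the round-robin placement are all assumptions chosen for illustration.

```python
MB = 1024 * 1024


def split_into_blocks(file_size, block_size):
    """Return (offset, length) pairs that together cover the whole file."""
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


def assign_blocks(blocks, nodes):
    """Hypothetical placement rule: block i goes to node i mod len(nodes)."""
    placement = {node: [] for node in nodes}
    for i, block in enumerate(blocks):
        placement[nodes[i % len(nodes)]].append(block)
    return placement


# A 300 MB file with an assumed 128 MB block size, spread over three nodes.
blocks = split_into_blocks(file_size=300 * MB, block_size=128 * MB)
placement = assign_blocks(blocks, ["node-1", "node-2", "node-3"])

for node, held in placement.items():
    print(node, [(off // MB, length // MB) for off, length in held])
```

No single node has to hold the whole file; each one stores only the blocks assigned to it, which is why the file can be larger than any one machine's disk.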

The second characteristic of Hadoop is the way it processes data through MapReduce. Traditional approaches move large data sets to the processing software, which takes a long time. Hadoop instead moves the processing software to the nodes where the data already lives. In the map step, each node processes its local portion of the data in parallel; in the reduce step, the partial results are combined into the final answer, so the job finishes in less time.
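The map and reduce steps can be sketched with a word count, the usual introductory example. This is plain Python running in one process, not Hadoop's own API: the function names, node names and sample text are assumptions, and the loop over nodes only stands in for work that a real cluster would run in parallel.

```python
from collections import defaultdict


def map_phase(chunk):
    """Map: turn one node's local text into (word, 1) pairs."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(pairs):
    """Group all values by key so each word's counts end up together."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the grouped values into one total per word."""
    return {key: sum(values) for key, values in grouped.items()}


# Hypothetical data: each node already holds its own piece of the input.
chunks_by_node = {
    "node-1": "big data needs big storage",
    "node-2": "hadoop stores big data",
}

mapped = []
for node, chunk in chunks_by_node.items():
    # On a real cluster this step would run on the node that holds the chunk.
    mapped.extend(map_phase(chunk))

counts = reduce_phase(shuffle(mapped))
print(counts)
```

Only the small (word, count) pairs travel between steps, while the raw text stays where it is stored. That is the point of moving the processing to the data.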

Why is Hadoop important in handling Big Data?

- The tools that Hadoop uses often run on the same servers where the data is located, which results in faster data processing.
- Hadoop provides a cost-effective storage solution for businesses.
- Hadoop is fault tolerant: when data is sent to an individual node, it is also replicated to other nodes in the cluster, so a copy is still available if a node fails.
- Hadoop provides a scalable storage platform for businesses.
- Because that storage is affordable, a company can keep all of its data for future use.
- Hadoop is widely used across industries such as finance, media and entertainment, government, healthcare, information services, and retail.
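The fault-tolerance point in the list above can be illustrated with a small simulation. This is a sketch under stated assumptions, not HDFS code: the function names, block IDs and node names are hypothetical, and the "next nodes in the ring" placement is a simplification (real HDFS placement also takes racks into account). The replication factor of 3 matches the HDFS default.

```python
def place_replicas(block_ids, nodes, replication=3):
    """Hypothetical rule: store each block on `replication` consecutive nodes."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for i, block in enumerate(block_ids):
        placement[block] = [nodes[(i + r) % len(nodes)] for r in range(replication)]
    return placement


def readable_blocks(placement, failed_nodes):
    """A block stays readable while at least one replica is on a live node."""
    return {
        block
        for block, holders in placement.items()
        if any(node not in failed_nodes for node in holders)
    }


nodes = ["node-1", "node-2", "node-3", "node-4"]
placement = place_replicas(["blk-0", "blk-1", "blk-2"], nodes)

# Two nodes fail, yet every block still has a surviving copy.
survivors = readable_blocks(placement, failed_nodes={"node-2", "node-3"})
print(sorted(survivors))
```

With three copies of each block, data is lost only if every node holding a given block fails at the same time.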

Hadoop has matured to the point that large, complex applications can be built on it successfully. It also meets the scalability requirements of handling large data sets of varied types.

Big vendors are positioning themselves around this technology. Some examples:

- Oracle offers the Big Data Appliance, a server dedicated to storing and using unstructured content.
- IBM built BigInsights and acquired many niche players in the analytics and big data market.
- Informatica built a tool called HParser, which launches Informatica processes in MapReduce mode, distributed across Hadoop servers.
- Microsoft offers Apache Hadoop for Microsoft Windows and Azure.
- Some large database solutions, such as HP Vertica, EMC Greenplum, Teradata Aster Data, and SAP Sybase IQ, can connect directly to HDFS.